Uni3D: Exploring Unified 3D Representation at Scale

ICLR 2024 Spotlight / Authors from Beijing Academy of Artificial Intelligence, Tsinghua U, Peking U

https://github.com/baaivision/Uni3D

Abstract

- Image/text representation learning has advanced explosively thanks to large-scale scaling, but there is very little work on scaling up 3D object/scene representations

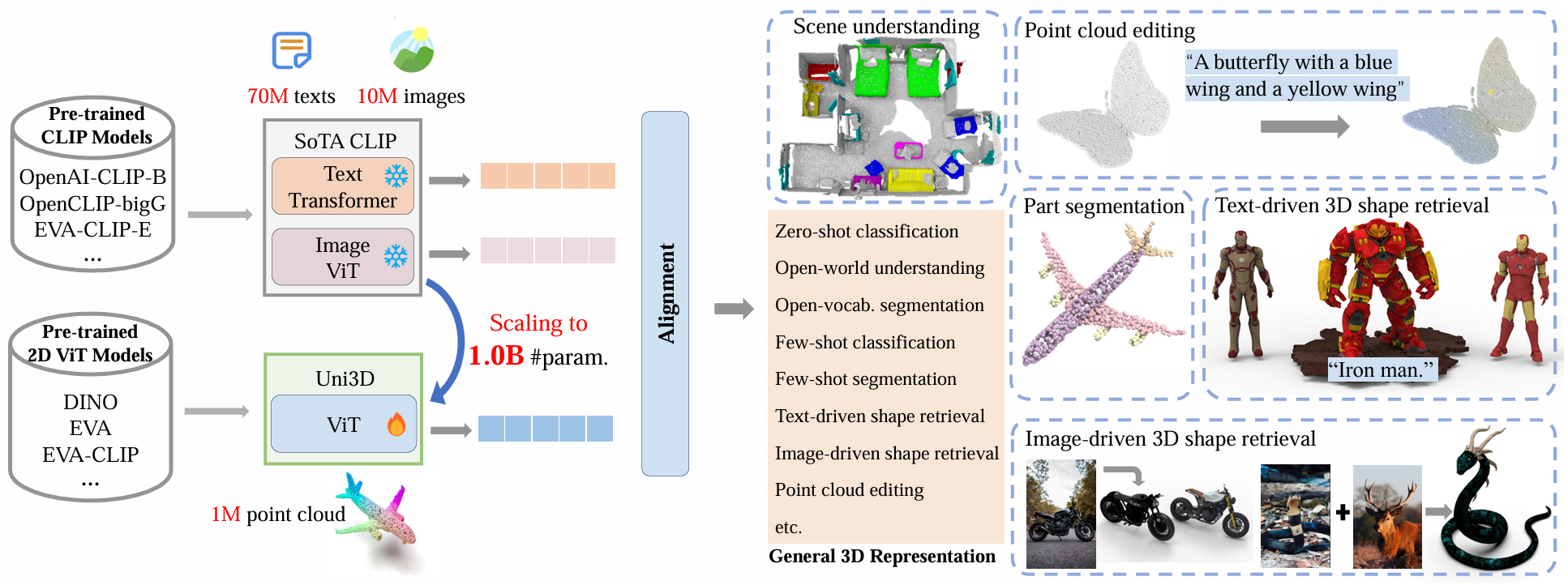

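- This work proposes Uni3D, a 3D foundation model with a unified 3D representation

- Uses a pretrained ViT as its initialization

- Aligns 3D point cloud features end-to-end to the image-text aligned feature space

- → a design that carries representations already learned in the 2D world over to the 3D world

- “Just as the image/text fields achieved breakthroughs with very large models, let's scale up to large models in 3D and improve performance!”

- This makes it possible to reuse the pretrained knowledge of 2D models and the semantic space of multimodal models such as CLIP → scaling up 3D representations

- Simple architecture, but the parameter count is scaled up to 1B → 3D representation ability keeps improving as scale increases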

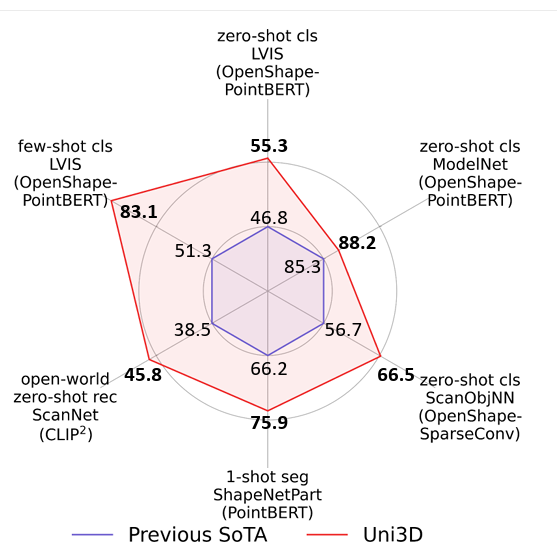

- Tasks: sets new records in zero-shot classification, few-shot classification, open-world understanding, and part segmentation

Introduction

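- Importance of 3D representation learning

- However, existing 3D research has stayed at small scale

- Method

- The 3D encoder is initialized from a 2D ViT

- 3D point cloud features are aligned to the image-text feature space

- Both the architecture and the pretext task are simple

- Pretrained 2D models can easily be reused as the initialization

- CLIP/BLIP-family image-text aligned models can be used as targets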

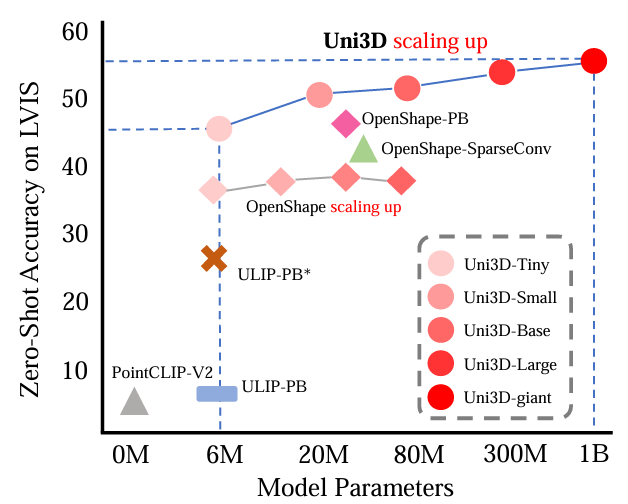

- Scaling experiments

- Scaling along three axes

- Model size: 6M → 1B

- Initialization source: visual self-supervised → text-supervised

- Target multimodal (teacher) model: 150M → 5B

- Found that performance keeps rising as scale grows along every axis


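- Results

- ModelNet40 zero-shot accuracy of 88.2%, on par with some supervised methods

- SOTA on several tasks: few-shot, part segmentation, open-world understanding, etc.

- Also applies strongly to other tasks

⇒ First large-scale experimental evidence that “just as scaling drove breakthroughs in the 2D and language worlds, scaling also dramatically improves performance in 3D”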

Method

3.1. Unified 3D representation

- The key idea of Uni3D is to bring the 2D ViT architecture to 3D as-is

- The backbone is a vanilla transformer; only the part that turns 3D input into tokens the ViT can process is replaced

- Patch embedding → replaced with a point tokenizer!


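Point tokenizer: FPS → KNN → PointNet → Transformer → 3D representation

- Sample representative (center) points with FPS (farthest point sampling)

- Group the neighboring points around each center with KNN so that each local region becomes one 3D patch

- Tiny PointNet encoder

- Extracts one feature vector from each 3D patch

- Why is it needed?

- Input format of a standard transformer

- [token1, token2, token3, …]

- Each patch looks like this

- patch1 = (point 1, point 2, point 3, …) → a set of k points; patch2 = (point 1, point 2, point 3, …) → another set of k points; … (with KNN grouping every patch has the same k points, but the points have no natural order)

- A PointNet pass is needed to turn each unordered point set into a single fixed-size vector while preserving its local spatial structure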


- i.e., it plays the same role as patch embedding in ViT!

- These 3D tokens are then fed into the transformer → transformer → the 3D representation is extracted
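The tokenizer pipeline above can be sketched in NumPy. This is a minimal illustration, not Uni3D's code: the group count (64), neighbors per group (32), the random weights, and the one-hidden-layer "tiny PointNet" are all assumptions chosen for readability.

```python
import numpy as np


def farthest_point_sampling(xyz: np.ndarray, n_centers: int, seed: int = 0) -> np.ndarray:
    """Greedy FPS: repeatedly pick the point farthest from all centers chosen so far."""
    rng = np.random.default_rng(seed)
    n = xyz.shape[0]
    centers = np.empty(n_centers, dtype=np.int64)
    centers[0] = rng.integers(n)
    min_dist = np.full(n, np.inf)  # squared distance to the nearest chosen center
    for i in range(1, n_centers):
        d = np.sum((xyz - xyz[centers[i - 1]]) ** 2, axis=1)
        min_dist = np.minimum(min_dist, d)
        centers[i] = int(np.argmax(min_dist))
    return centers


def knn_group(xyz: np.ndarray, center_idx: np.ndarray, k: int):
    """Gather the k nearest points of each center; coordinates become center-relative."""
    centers = xyz[center_idx]                                          # (G, 3)
    d = np.sum((centers[:, None, :] - xyz[None, :, :]) ** 2, axis=-1)  # (G, N)
    nn = np.argsort(d, axis=1)[:, :k]                                  # (G, k)
    patches = xyz[nn] - centers[:, None, :]                            # (G, k, 3)
    return patches, centers


def tiny_pointnet(patches: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Shared per-point MLP followed by max-pooling: one order-invariant vector per patch."""
    h = np.maximum(patches @ w1, 0.0)  # (G, k, hidden), same weights for every point
    h = h @ w2                         # (G, k, dim)
    return h.max(axis=1)               # (G, dim): max over the k points of each patch


rng = np.random.default_rng(0)
xyz = rng.standard_normal((1024, 3))              # toy point cloud (xyz only)
idx = farthest_point_sampling(xyz, n_centers=64)
patches, centers = knn_group(xyz, idx, k=32)
w1 = rng.standard_normal((3, 64))
w2 = rng.standard_normal((64, 384))
tokens = tiny_pointnet(patches, w1, w2)           # (64, 384): one token per 3D patch
# Assumed (Point-BERT-style): a positional embedding of `centers` is added before the transformer.
```

Because max-pooling ignores point order, shuffling the points inside a patch leaves its token unchanged, which is what lets an unordered point set stand in for a ViT image patch.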

Scaling up Uni3D

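- Earlier attempts at scaling up 3D that fell short..

- Mostly based on small datasets and small models

- The research focus was concentrated on designing model architectures

- After Objaverse appeared, some works tried scaling, but their backbones were still too small

- Why?

- 3D backbones are not unified / no consistent scaling strategy can be applied

- Some backbones model local patterns directly over all points (DGCNN, PointMLP, etc.)

- Computation explodes as the model grows → scaling up is practically infeasible

- Uni3D's approach

- Other 3D backbones each need their own architecture-specific scaling strategy

- Uni3D uses the ViT architecture as-is → it can reuse the already-proven scale-up strategy

- Uni3D is scaled exactly the way ViT is scaled

- Tiny (6M), Small (23M), Base (88M), Large (307M), Giant (1B)

- Scaling up is done simply by swapping in a larger ViT variant

- Experiments confirm that performance rises steadily as model size grows

- Empirically shows that “scale = performance” holds in 3D

- Computational efficiency and training stability are also maintained

- Final outcome

- First to build a 1B-parameter 3D representation model

- Multimodal alignment trained on 1M 3D shapes, 10M images, and 70M texts

- Strong transfer performance confirmed on multiple downstream tasks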
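Because the backbone is a plain ViT, "scaling up" amounts to picking a bigger (depth, width, MLP) configuration. The sketch below uses the standard ViT-Ti/S/B/L shapes plus the EVA ViT-g shape; these configurations are assumed here rather than copied from the Uni3D code, and the count only includes the attention and MLP weight matrices.

```python
# (depth, width, mlp_dim): standard ViT / EVA shapes, assumed for illustration
VIT_CONFIGS = {
    "tiny": (12, 192, 768),
    "small": (12, 384, 1536),
    "base": (12, 768, 3072),
    "large": (24, 1024, 4096),
    "giant": (40, 1408, 6144),
}


def approx_block_params(depth: int, width: int, mlp_dim: int) -> int:
    """Weight-matrix parameters of `depth` transformer blocks (biases/norms ignored)."""
    attn = 4 * width * width   # Q, K, V and output projections
    mlp = 2 * width * mlp_dim  # the two linear layers of the MLP
    return depth * (attn + mlp)


for name, cfg in VIT_CONFIGS.items():
    print(f"{name:>5}: ~{approx_block_params(*cfg) / 1e6:.0f}M")
```

Ignoring biases, LayerNorms, embeddings and the point tokenizer, the estimates come out at roughly 5M, 21M, 85M, 302M and 1.01B, a little below the 6M/23M/88M/307M/1B quoted above; the point is that growing the model is a config change, not a redesign.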

Initializing Uni3D

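- Another problem in existing 3D pretraining

- Making the model large makes the backbone hard to train: overfitting, unstable convergence, etc.!

- One common remedy is to first pretrain with a 3D-specific pretext task

- Limitation: pretraining is costly, and the small data scale makes it hard to build a strong prior

- Uni3D's approach

- Because the 3D backbone is a ViT, there is no need for 3D-specific pretraining

- Large models already pretrained on images/multimodal data are used directly as the initialization point

- i.e., it leverages the large-scale knowledge and strong representational power these models have already learned

- e.g., 2D self-supervised models (DINO, EVA, etc.), text-image aligned models (CLIP, etc.)

- Any transformer model can be plugged in!!

- In short: start from a pretrained ViT and fine-tune it so that it works in the 3D world

- As a result,

- Overfitting and training instability are greatly mitigated even for large 3D backbones

- Cross-modal contrastive learning becomes easier even at huge model scale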
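Initializing from a 2D ViT boils down to copying every transformer weight whose name and shape match, while the image-specific patch embedding is dropped and the new point tokenizer keeps its own initialization. A minimal sketch over plain dicts of arrays; the parameter names are hypothetical timm-style keys, not Uni3D's actual ones.

```python
import numpy as np


def init_from_2d(vit_2d: dict, model_3d: dict, skip_prefixes=("patch_embed.", "pos_embed")):
    """Copy pretrained 2D ViT weights into a 3D model wherever name and shape match.

    Image-specific parts (patch embedding, image positional embedding) are skipped;
    anything without a 2D counterpart (e.g. the point tokenizer) keeps its fresh init.
    """
    loaded, skipped = [], []
    for name, param in list(model_3d.items()):
        src = vit_2d.get(name)
        if name.startswith(skip_prefixes) or src is None or src.shape != param.shape:
            skipped.append(name)
            continue
        model_3d[name] = src.copy()
        loaded.append(name)
    return loaded, skipped


rng = np.random.default_rng(0)
vit_2d = {  # hypothetical parameter names of a pretrained 2D ViT-B
    "patch_embed.proj.weight": rng.standard_normal((768, 3, 16, 16)),
    "blocks.0.attn.qkv.weight": rng.standard_normal((2304, 768)),
    "blocks.0.mlp.fc1.weight": rng.standard_normal((3072, 768)),
}
model_3d = {  # hypothetical parameter names of the 3D model
    "point_tokenizer.mlp.weight": np.zeros((384, 3)),
    "blocks.0.attn.qkv.weight": np.zeros((2304, 768)),
    "blocks.0.mlp.fc1.weight": np.zeros((3072, 768)),
}
loaded, skipped = init_from_2d(vit_2d, model_3d)
```

After this step the whole network, copied blocks included, is trained with the alignment objective; the ablation in 4.7 reports that freezing the copied backbone instead performs worst.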

3.2. Multi-Modal Alignment

- Following the ULIP and OpenShape paradigm, the goal is to learn multimodal alignment among language, images, and point clouds

Datasets

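- For a fair comparison, it trains on the ensembled 3D dataset released by OpenShape as-is

- Objaverse, ShapeNet, 3D-FUTURE, ABO

- The four datasets are combined into one large 3D dataset

- Preprocessing

- 10,000 points sampled per shape (with RGB)

- 10 rendered images generated per shape

- Triplets are built the same way as in OpenShape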

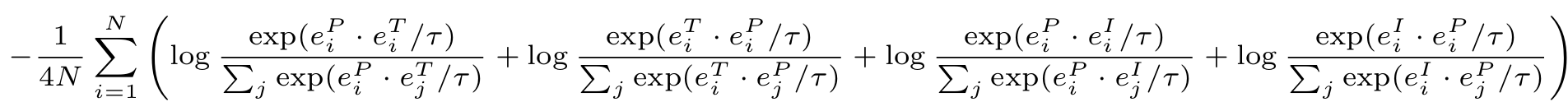

Objective

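- Training goal: train the 3D encoder f_p so that 3D point cloud features align with CLIP's image-text feature space

- What is trained: 🔥 the 3D encoder only; ❄️ the image/text encoders are frozen

- Input: triplet (point cloud, image, text)

- Features are L2-normalized → e_p, e_i, e_t

- This lets the cosine similarity be computed directly as a dot product

- Four alignment objectives in total (same as OpenShape and ULIP-2)

- Fix the 3D shape, vary the text: pull toward the matching text, push away from non-matching texts

- Fix the text, vary the 3D shape: pull toward the matching shape, push away from non-matching shapes

- Fix the 3D shape, vary the image: pull toward the matching image, push away from non-matching images

- Fix the image, vary the 3D shape: pull toward the matching shape, push away from non-matching shapes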
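The four objectives above amount to two symmetric InfoNCE (CLIP-style) losses, point-text and point-image. A minimal NumPy sketch, assuming a fixed temperature of 0.07 and a plain sum of the four terms (the actual weighting and whether the temperature is learned are not stated in these notes). `f_i` and `f_t` stand for precomputed outputs of the frozen image/text encoders, so only `f_p` would receive gradients in training.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def cross_entropy_diag(logits):
    """Mean cross-entropy where the correct column for row i is i (the matched pair)."""
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))


def uni3d_style_loss(f_p, f_i, f_t, temperature=0.07):
    e_p, e_i, e_t = l2_normalize(f_p), l2_normalize(f_i), l2_normalize(f_t)
    logits_pt = e_p @ e_t.T / temperature  # (B, B) point-text cosine similarities
    logits_pi = e_p @ e_i.T / temperature  # (B, B) point-image cosine similarities
    return (cross_entropy_diag(logits_pt)      # fix 3D, vary text
            + cross_entropy_diag(logits_pt.T)  # fix text, vary 3D
            + cross_entropy_diag(logits_pi)    # fix 3D, vary image
            + cross_entropy_diag(logits_pi.T)) # fix image, vary 3D


rng = np.random.default_rng(0)
f_t = rng.standard_normal((8, 32))  # a batch of 8 text features (frozen encoder output)
aligned = uni3d_style_loss(f_t.copy(), f_t, f_t)
unaligned = uni3d_style_loss(rng.standard_normal((8, 32)), f_t, f_t)
```

Every other item in the batch acts as a negative, which is why larger batches give the contrastive signal more "wrong answers" to push away from.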

Image-Text aligned target

- Uni3D is not tied to a specific CLIP model; any CLIP teacher can be used

- The larger the teacher CLIP, the stronger Uni3D's alignment and the higher its performance

Experiment

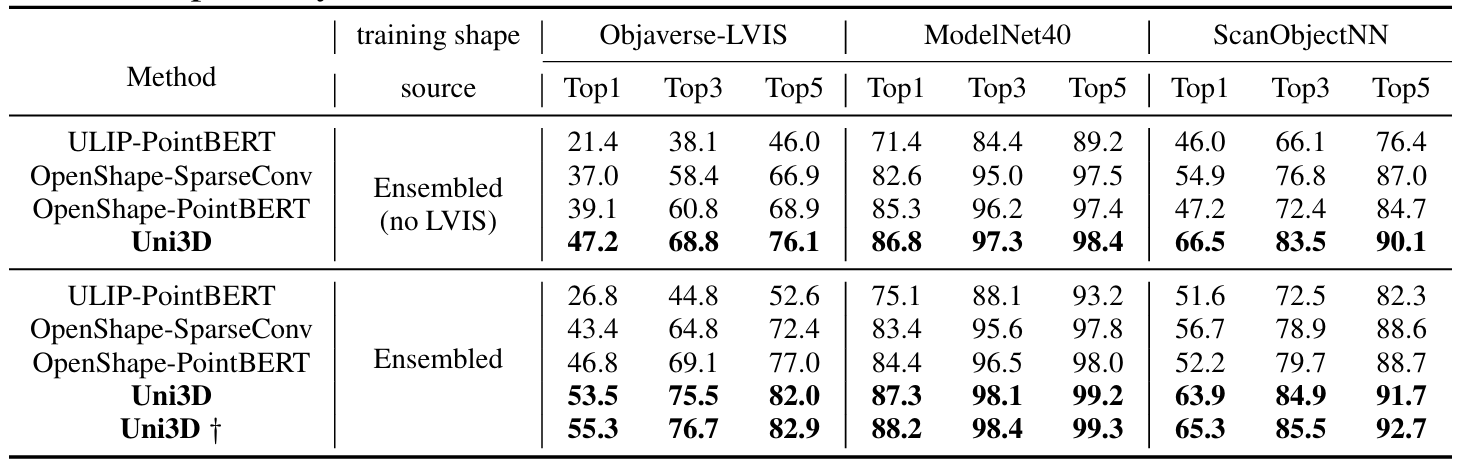

4.1. Zero-shot Shape Classification

- Datasets: ModelNet40 (40 categories), ScanObjectNN (15), Objaverse-LVIS (1,156)

- Follows OpenShape's setup

- Objaverse-LVIS: 10,000 colored points sampled

- ModelNet40: 10,000 points sampled, no color

- ScanObjectNN: 2,048 points without color, OBJ_ONLY version

- Baselines: PointCLIP, PointCLIP V2, ULIP, OpenShape

- PointCLIP, PointCLIP V2: project the point cloud into image-like views and classify them directly with 2D CLIP

- ULIP, OpenShape: train a 3D backbone and align 3D → CLIP

- “Ensembled”: trained on the four 3D datasets

- “Ensembled (no LVIS)”: the same data with the LVIS portion excluded

- Uni3D clearly outperforms the previous SOTA in both the Ensembled and the no-LVIS settings

- The † symbol supposedly marks the best performance each model achieved on each benchmark (evaluation dataset).. does it mean the larger model?
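Zero-shot classification reduces to nearest-text lookup: each category name is turned into a prompt, encoded once by the frozen CLIP text encoder, and each shape takes the class whose text embedding has the highest cosine similarity to its point-cloud embedding. A minimal sketch; the prompt template mentioned in the comment and the toy embeddings are assumptions.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def zero_shot_scores(point_emb, class_text_emb):
    """Cosine similarity between every shape (rows) and every class prompt (columns)."""
    return l2_normalize(point_emb) @ l2_normalize(class_text_emb).T


def topk_accuracy(scores, labels, k=1):
    """Fraction of shapes whose true class is among the k highest-scoring classes."""
    topk = np.argsort(-scores, axis=1)[:, :k]
    return float(np.mean([labels[i] in topk[i] for i in range(len(labels))]))


# Toy stand-ins: rows of class_text_emb would be text-encoder outputs for prompts such as
# "a point cloud of a {class name}" (template assumed for illustration).
class_text_emb = np.eye(3)
point_emb = np.array([[0.9, 0.1, 0.0],
                      [0.0, 0.2, 1.0],
                      [0.3, 0.5, 0.0]])
scores = zero_shot_scores(point_emb, class_text_emb)
pred = scores.argmax(axis=1)
```

No labeled 3D example is used anywhere: adding a new category only means encoding one more prompt.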

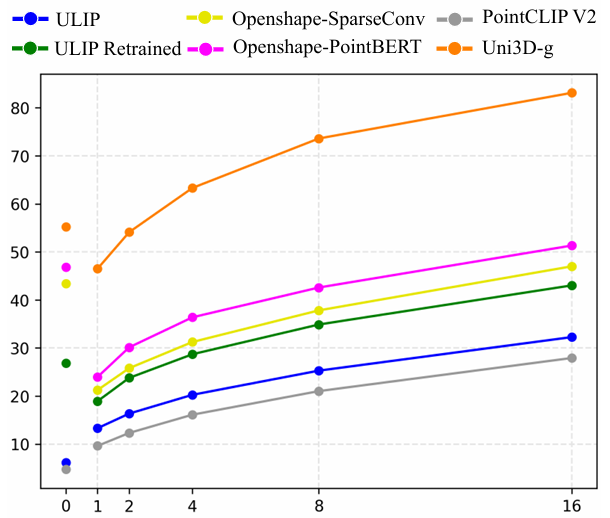

4.2. Few-shot Linear Probing

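- What is linear probing?

- A standard way to evaluate the quality of a model's representation

- Method

- The trained representation model (here Uni3D) is frozen

- A linear classifier is trained using only a small amount of labeled data

- Tests how well the representation was learned (the model itself is not trained further)

- Assumption: the better the representation, the higher the accuracy a linear classifier reaches with few labels → well suited to measuring few-shot performance

- Performed on Objaverse-LVIS

- Few-shot settings with 1, 2, 4, 8, and 16 labels per class

- In the 1-shot setting → only 1 labeled sample is provided per category

- Zero-shot evaluates by similarity to text embeddings, whereas few-shot evaluates by training a linear classifier

- Overall, Uni3D beats the other baselines by a large margin in every few-shot setting

- Strong transfer performance even when labeled data is scarce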
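A few-shot linear probe keeps the encoder frozen and fits only a softmax (multinomial logistic regression) layer on its output features. A minimal NumPy sketch with plain gradient descent; the learning rate, step count, weight decay and the toy clustered "features" are assumptions, and the features are L2-normalized first, like the embeddings above.

```python
import numpy as np


def train_linear_probe(feats, labels, n_classes, lr=0.5, steps=500, weight_decay=1e-4):
    """Fit W, b of a softmax classifier on frozen features; the encoder is never updated."""
    n, d = feats.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[labels]                    # one-hot targets
    for _ in range(steps):
        logits = feats @ W + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - Y) / n                       # d(mean cross-entropy) / d(logits)
        W -= lr * (feats.T @ grad + weight_decay * W)
        b -= lr * grad.sum(axis=0)
    return W, b


def predict(feats, W, b):
    return np.argmax(feats @ W + b, axis=1)


# Toy 2-shot setup: 4 classes, 2 labeled frozen-feature vectors per class for training.
rng = np.random.default_rng(0)
class_means = rng.standard_normal((4, 16)) * 3.0


def sample(per_class):
    y = np.repeat(np.arange(4), per_class)
    x = class_means[y] + 0.1 * rng.standard_normal((len(y), 16))
    return x / np.linalg.norm(x, axis=1, keepdims=True), y


x_train, y_train = sample(2)
x_test, y_test = sample(25)
W, b = train_linear_probe(x_train, y_train, n_classes=4)
accuracy = float(np.mean(predict(x_test, W, b) == y_test))
```

Only `W` and `b` (d × C + C numbers) are learned, so with 1 to 16 labels per class the probe mostly measures how linearly separable the frozen features already are.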

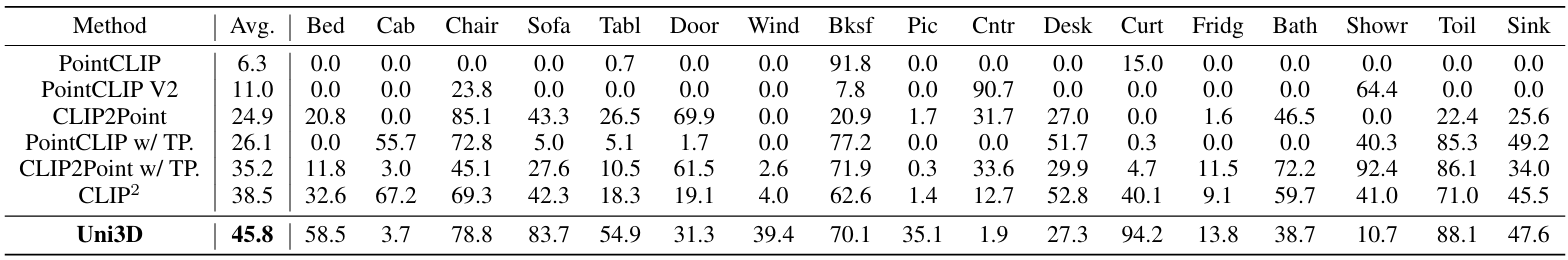

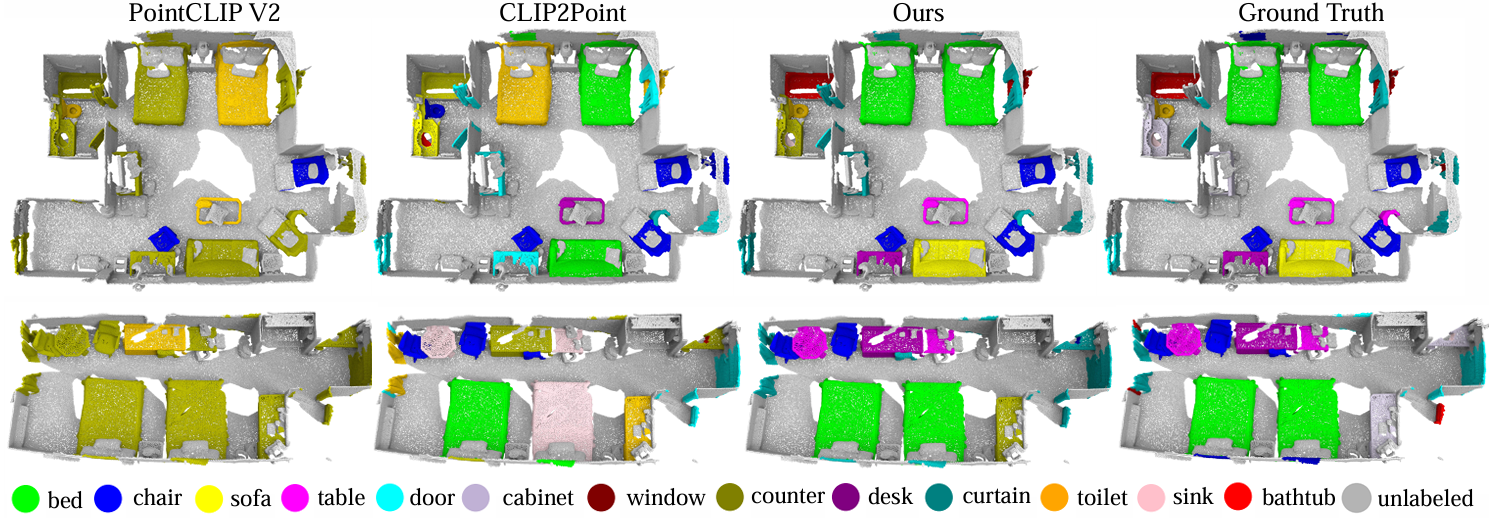

4.3. Open-World Understanding

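- Evaluates how well Uni3D understands real-world 3D scenes and objects

- Dataset: ScanNet, a large-scale 3D dataset of about 1,500 real-world scanned indoor scenes

- Goal: recognize the category of each object instance in a zero-shot manner

- Instance segmentation is not evaluated; only category classification

- Many existing methods are additionally trained on real-world data (those marked TP were further trained on real-world point cloud-image-text triplets)

- Yet Uni3D, trained only on synthetic data without ever seeing real-world data, achieves the highest zero-shot performance ⇒ it has real-world 3D generalization ability

- Why?

- Uni3D inherits CLIP's large-scale real-world multimodal knowledge → strong real-world generalization

- The large-scale model provides a large representational capacity

- The instance segmentation masks are given in advance, and each instance is classified zero-shot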

4.4. Open-Vocabulary / few-shot part segmentation

- [Part segmentation]

- In 2D, prior work already shows that transferring CLIP's vision-language knowledge to downstream tasks improves their performance, but such work is rare in 3D

- Aims to show that CLIP-based representations can also boost part segmentation performance in 3D

- Dataset: ShapeNet

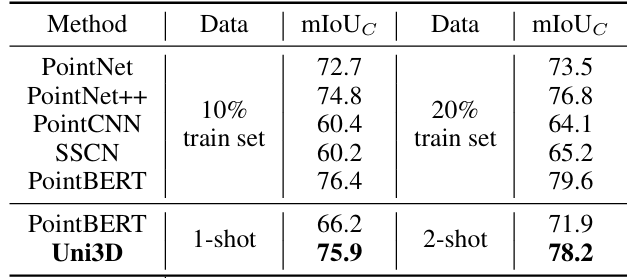

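Comparison of 1-shot and 2-shot results

- In the 1- and 2-shot settings, Uni3D beats Point-BERT by a large margin

- Even when the baselines get 10~20% of the training data, Uni3D is still better in almost every case

- In other words, Uni3D reaches with just 1-2 shots the level of performance that other models need 10~20% of the labeled data to reach

→ Uni3D's representation is strong enough to perform the task well with little task-specific supervision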

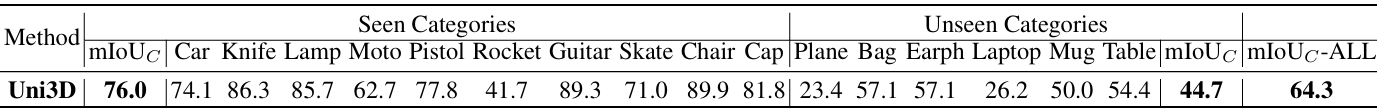

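- [Open-vocabulary part segmentation]

- An experiment evaluating whether the model understands part-level semantics and can segment parts even for part names it has never seen

- Whether Uni3D finely understands local 3D geometry + semantic cues

- Whether it can generalize 3D part-level concepts to an open vocabulary

- Checks whether even the fine-grained part semantics inside an object generalize to an open vocabulary

- The ShapeNet dataset is split into seen and unseen (the seen/unseen split is by category)

- “Uni3D sees some part names during training, and meets the remaining part names for the first time at test time”

- Strong performance on seen parts and on unseen parts as well

- Thanks to real-world knowledge distilled from CLIP, the representation captures not only object-level semantics but also fine-grained, part-level local 3D patterns…

⇒ Uni3D is the first 3D foundation backbone capable of open-vocabulary 3D part recognition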

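At inference, open-vocabulary part labeling can be pictured as per-point nearest-text lookup: every point gets a feature, every part name (seen or unseen) gets a text embedding, and each point takes the most similar name. This is an assumed simplification: it spreads patch-token features to points by copying the nearest center's feature, while how Uni3D actually produces and trains per-point features is not described in these notes.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def propagate_to_points(xyz, centers, token_feats):
    """Hypothetical upsampling: each point copies the feature of its nearest patch center."""
    d = np.sum((xyz[:, None, :] - centers[None, :, :]) ** 2, axis=-1)  # (N, G)
    return token_feats[np.argmin(d, axis=1)]                           # (N, D)


def label_parts(point_feats, part_text_emb):
    """Assign each point the part name whose text embedding is most similar (cosine)."""
    sims = l2_normalize(point_feats) @ l2_normalize(part_text_emb).T   # (N, P)
    return sims.argmax(axis=1)


# Toy example: two patches and two part names (e.g. "leg", "seat"; both hypothetical).
centers = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]])
token_feats = np.array([[1.0, 0.0], [0.0, 1.0]])
xyz = np.array([[0.1, 0.0, 0.0], [9.9, 0.0, 0.0], [1.0, 0.0, 0.0]])
part_text_emb = np.array([[1.0, 0.1], [0.1, 1.0]])
labels = label_parts(propagate_to_points(xyz, centers, token_feats), part_text_emb)
```

Since the label set is just a list of text embeddings, an unseen part name can be added at test time without retraining anything.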

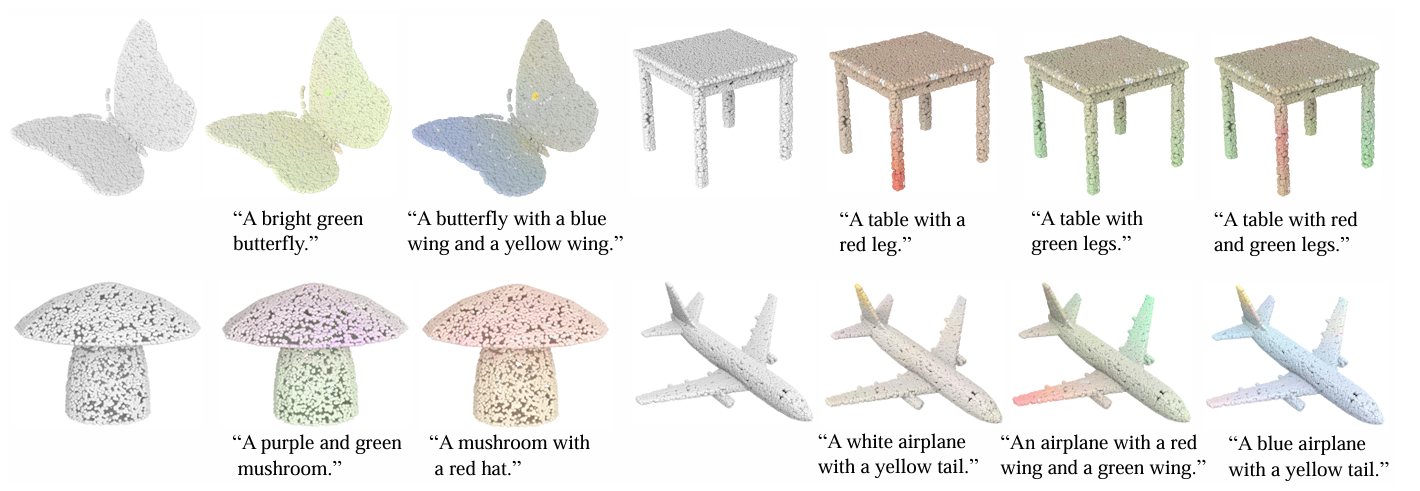

4.5. Point cloud painting

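- Presents a new use case showing how well Uni3D understands the fine-grained semantic patterns of 3D objects

- Point cloud painting: optimizing the colors of a point cloud to match a text prompt

- RGB values are optimized so that the similarity between the point cloud embedding and the text prompt embedding is maximized

- Only the point cloud's RGB values change

- The results show it can color the point cloud in a way that reflects complex meanings contained in the prompt

- Shows that Uni3D has learned prompt-level semantic structure through contrastive learning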
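Point cloud painting is gradient ascent on the colors alone: the encoder and the text embedding stay fixed, and the RGB values move in the direction that raises the cosine similarity, then get clipped back to [0, 1]. The sketch replaces the frozen Uni3D encoder with a hypothetical toy encoder (one tanh layer + mean pooling) so the gradient can be written by hand; the step size, step count and random "text embedding" are assumptions. A real setup would backpropagate through the frozen encoder with an autodiff framework instead.

```python
import numpy as np


def toy_encoder(xyz, rgb, W):
    """Hypothetical stand-in for a frozen point encoder: per-point tanh layer, then mean pooling."""
    h = np.tanh(np.concatenate([xyz, rgb], axis=1) @ W)  # (N, D)
    return h.mean(axis=0), h                             # embedding (D,), per-point activations


def cosine_and_rgb_grad(xyz, rgb, W, text_emb):
    """Cosine similarity to the text embedding and its gradient w.r.t. every point's RGB."""
    e, h = toy_encoder(xyz, rgb, W)
    t = text_emb / np.linalg.norm(text_emb)
    norm_e = np.linalg.norm(e)
    s = float(t @ e) / norm_e
    g_e = (t - s * e / norm_e) / norm_e    # d cos / d e
    g_h = (1.0 - h ** 2) * g_e / len(rgb)  # back through the mean and the tanh
    return s, g_h @ W[3:].T                # rows 3..5 of W multiply the RGB inputs


def paint(xyz, text_emb, W, steps=200, lr=2.0, seed=0):
    """Projected gradient ascent on RGB only; xyz, W and text_emb stay fixed."""
    rgb = np.random.default_rng(seed).uniform(0.0, 1.0, size=xyz.shape)
    history = []
    for _ in range(steps):
        s, g = cosine_and_rgb_grad(xyz, rgb, W, text_emb)
        history.append(s)
        rgb = np.clip(rgb + lr * g, 0.0, 1.0)  # keep colors valid
    history.append(cosine_and_rgb_grad(xyz, rgb, W, text_emb)[0])
    return rgb, history


rng = np.random.default_rng(1)
xyz = rng.uniform(-1.0, 1.0, size=(128, 3))
W = 0.5 * rng.standard_normal((6, 16))
text_emb = rng.standard_normal(16)  # stands in for the encoded text prompt
rgb, history = paint(xyz, text_emb, W)
```

Geometry is never touched, so whatever the similarity gain is, it can only come from how the colors are laid out over the fixed shape.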

4.6. Cross-modal Retrieval

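- Using multimodal embeddings for retrieval ← the application I'm working on!!

- Since images, text, and 3D can be compared in one space, 3D shapes can be retrieved

- Image → 3D retrieval

- The training data is synthetic, yet it also works well on real-world images~~

- First column: image-to-3D retrieval

- Second column: retrieval using the average embedding of two input images

- Shows that multiple signals can be combined

- Third column: text-to-3D retrieval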
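Because all three modalities share one embedding space, retrieval is a cosine-similarity ranking over precomputed 3D embeddings, and combining two query images is just averaging their normalized embeddings before ranking. A minimal sketch; the toy embeddings are assumptions, and whether Uni3D renormalizes the averaged query is not stated in these notes.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def retrieve(query_embs, gallery_embs, k=5):
    """Rank gallery shapes by cosine similarity to the (averaged) query embedding.

    query_embs: (Q, D) embeddings of one or more queries (image or text, same space).
    gallery_embs: (M, D) point-cloud embeddings of the 3D shapes to search.
    """
    query = l2_normalize(l2_normalize(query_embs).mean(axis=0))  # average, then renormalize
    sims = l2_normalize(gallery_embs) @ query                     # (M,)
    return np.argsort(-sims)[:k]


gallery = np.eye(4)  # toy 3D-shape embeddings
single = retrieve(np.array([[1.0, 0.2, 0.0, 0.0]]), gallery, k=1)
pair = retrieve(np.array([[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]), gallery, k=2)
```

The same `retrieve` call covers image-to-3D and text-to-3D; only the encoder that produced `query_embs` changes.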

4.7. Ablation Study

- Default setting

- 3D backbone: ViT-Base

- Backbone initialization weights: EVA pretrained weights

- CLIP: EVA-CLIP-E

- Training data: Ensembled (no LVIS)

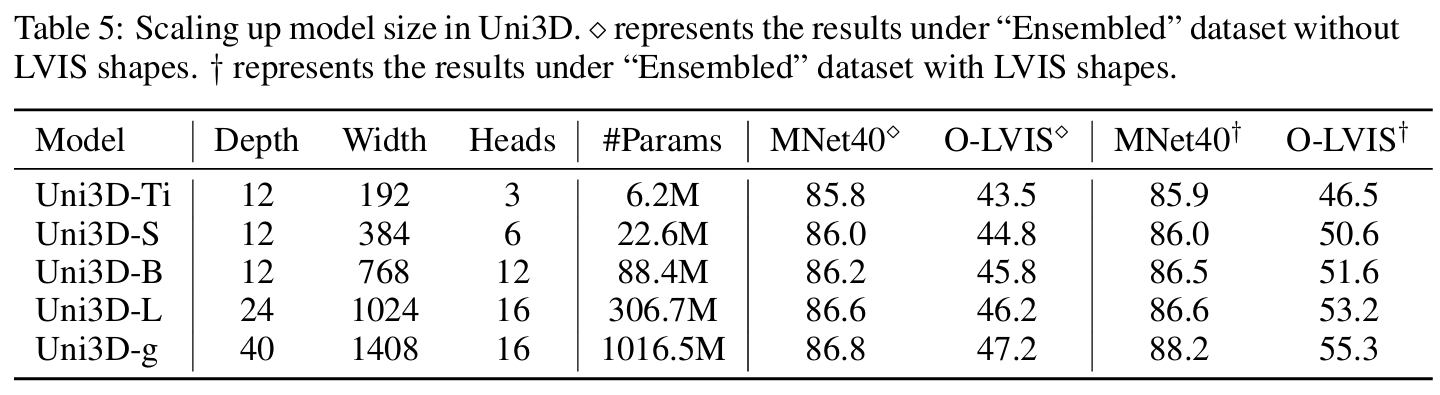

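- Scaling up model size

- How much does performance improve as model size grows?

- Uses a transformer structurally identical to ViT

- The five versions mentioned earlier: Tiny, Small, Base, Large, Giant (the same ones used for images)

- Performance improves as model scale grows

- The Giant model in particular reaches a level of representation performance not achieved in prior 3D work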

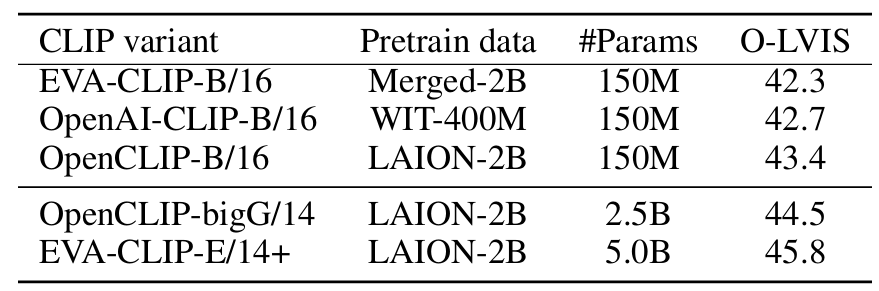

- Switching / scaling up CLIP teachers

- How much does Uni3D's performance depend on which CLIP model is used?

- Does a stronger CLIP make Uni3D stronger?

Compares different CLIP teachers: OpenAI-CLIP, OpenCLIP, EVA-CLIP, and large-scale CLIPs (OpenCLIP-bigG, EVA-CLIP-E, ..)

- A stronger CLIP gives a better Uni3D → best performance with the largest CLIP (bottom row)

- The stronger the teacher, the more precise and richer the semantic signal passed to the 3D encoder

- Suggests that as CLIP models improve, Uni3D can improve along with them simply by swapping the teacher

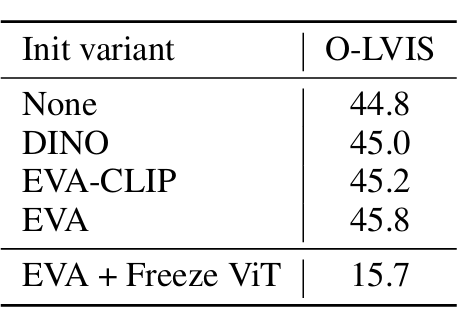

- Initializing Transformer

- How does the way Uni3D is initialized affect performance?

- No initialization, 2D pretrained ViTs (DINO, EVA), a multimodal CLIP (EVA-CLIP), and EVA + frozen ViT (initialized with EVA but with the backbone frozen and used without fine-tuning)

DINO, EVA-CLIP, and EVA are each used as initial weights and then retrained (fine-tuned) the Uni3D way

- EVA is the best and EVA + frozen ViT is the worst → a 2D pretrained backbone should not be used frozen (fine-tuning is essential)

Conclusion

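- Uni3D is a unified framework that scales 3D models up to the 1B-parameter scale

- Instead of designing a new 3D network architecture, it takes ViT as-is and uses it as the backbone

- Benefits of using the ViT architecture?

- Can reuse the scaling-up strategies already established in 2D

- Can use 2D pretrained weights as the initialization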


- Trained on large-scale data: 1M 3D shapes, 10M images, and 70M texts

- Aligns point cloud features to an image-text feature space that is already well aligned

- Achieves SOTA on multiple tasks → a 3D multimodal foundation model